Progress is an unstoppable force that seems to roll on without ever meeting an immovable object. Technology is marching decisively, and the direction of its march is quite worrying. Humanity has long dreamed of reaching a utopia in which people do not have to work because machines do all the work.

For the vast majority of scientists and philosophers, this remained the dream until we started thinking harder about how our society is structured and what implications an A.I. would have for our world.

They Took Our Jobs

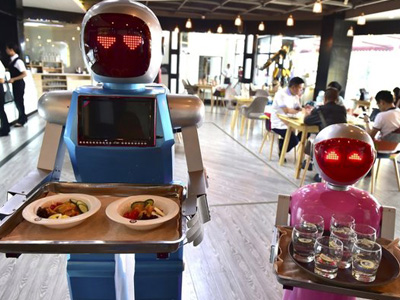

Robots are everywhere. Machines gradually became better at physical labor and replaced the human workforce in many heavy industries. People were happy, since more individuals could pursue better careers and climb the social ladder. For decades, we believed that we were on the right track, but things changed.

Automation is infiltrating every industrial domain and even our daily lives. Automated fast food outlets, storage facilities, factories that employ only a couple of engineers and technicians, and automated bank tellers once seemed like a mere inconvenience. People could still move into other fields to stay relevant and useful to society, but the arrival of self-driving cars and automated cashiers will hit much harder.

We don’t need more accountants, or even doctors, since much of their work can be partially or even fully automated, which means that specialized jobs are also being targeted by machines. Yes, humanity as a whole has advanced, but many individuals have lost their income and even their purpose in life. When over 2 million truck drivers are left without any work, where will we move them? We don’t need that many more programmers, developers, and designers either.

The A.I. Is Near

Google is working hard to create an Artificial Intelligence that could surpass humans and start learning on its own. Some scientists believe that this will be the end of humanity. Others think that we will ascend with the help of A.I. However, every scenario looks questionable and worrisome. We don’t know how our “Babyboy” will behave. We don’t even know whether we should allow it to exist.

A godlike A.I. will think on a different level, and we won’t even understand it. Should we create something that we will be incapable of understanding minutes after we turn it on? Will it be our friend? Will it become a villain? Will it think of us as primitive animals that should be preserved and protected? The problem is that we do not know.